System Logs 101: Ultimate Guide to Mastering System Logs Now

Ever wondered what your computer is doing behind the scenes? System logs hold the answers—revealing everything from routine operations to hidden security threats. Let’s dive into the powerful world of system logs and unlock their full potential.

What Are System Logs and Why They Matter

System logs are digital footprints left behind by operating systems, applications, and network devices. Every time a service starts, a user logs in, or a system error occurs, a record is created. These records, collectively known as system logs, are essential for monitoring, troubleshooting, and securing IT environments.

The Core Definition of System Logs

System logs are timestamped records generated by software and hardware components within a computing environment. They document events such as system startups, shutdowns, errors, warnings, and user activities. These logs are typically stored in plain text or structured formats like JSON or XML, depending on the system.
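
To make the idea of a timestamped record concrete, here is a minimal sketch that splits one classic syslog-style line into named fields. The sample entry, the host name `web01`, and the regular expression are illustrative assumptions; real systems vary in timestamp format and field layout.

```python
import re
from typing import Optional

# Pattern for a classic BSD-style syslog line:
#   "<timestamp> <host> <program>[<pid>]: <message>"
SYSLOG_PATTERN = re.compile(
    r"^(?P<timestamp>\w{3}\s+\d{1,2}\s\d{2}:\d{2}:\d{2})\s"
    r"(?P<host>\S+)\s"
    r"(?P<program>[^\[:\s]+)(?:\[(?P<pid>\d+)\])?:\s"
    r"(?P<message>.*)$"
)


def parse_syslog_line(line: str) -> Optional[dict]:
    """Split one syslog-style line into named fields, or return None."""
    match = SYSLOG_PATTERN.match(line)
    return match.groupdict() if match else None


# Hypothetical sample entry, invented for illustration.
sample = (
    "Mar  4 10:15:32 web01 sshd[2211]: "
    "Failed password for root from 203.0.113.7 port 52314 ssh2"
)
record = parse_syslog_line(sample)
print(record)
```

Once a line is broken into fields like these, it can be filtered, counted, or shipped to a structured store such as JSON.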

According to the ISO/IEC 27001 standard, logging is a critical component of information security management. It ensures accountability and enables forensic analysis after incidents.

Types of Events Captured in System Logs

System logs capture a wide range of operational events. These include:

- Authentication attempts (successful and failed logins)

- Service startups and crashes

- File access and modification

- Network connection attempts

- System updates and patch installations

- Hardware failures or resource exhaustion (e.g., low disk space)

“Without logs, you’re flying blind in your IT environment.” — Anonymous Security Expert

Why System Logs Are Non-Negotiable in IT

Imagine managing a server farm without knowing when a service failed or who accessed sensitive data. System logs eliminate guesswork. They provide visibility into system behavior, support compliance with regulations like GDPR and HIPAA, and are indispensable during audits.

For example, in the case of a data breach, system logs can reveal the attack vector, timeline, and affected systems—critical information for incident response teams.

The Critical Role of System Logs in Security

Security teams rely heavily on system logs to detect and investigate cyber threats and to prevent repeat incidents. Logs do not block attacks on their own, but they are often the earliest signal of suspicious activity, particularly when they are monitored in real time.

Detecting Unauthorized Access Through Logs

One of the most powerful uses of system logs is identifying unauthorized access. Failed login attempts, unusual login times, and logins from unfamiliar IP addresses or unexpected locations are red flags that can be spotted in authentication logs.

Tools like SIEM (Security Information and Event Management) systems aggregate logs from multiple sources and use correlation rules to detect anomalies. For instance, five failed SSH login attempts followed by a successful one might trigger an alert for a potential brute-force attack.
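
The correlation rule described above can be sketched in a few lines. This is a simplified illustration, not how any particular SIEM implements it: the `(source_ip, outcome)` event schema and the function name are assumptions, and the threshold of five comes from the example in the text.

```python
from collections import defaultdict

FAILURE_THRESHOLD = 5  # taken from the example in the text


def find_bruteforce_suspects(events, threshold=FAILURE_THRESHOLD):
    """Return source IPs whose successful login followed `threshold`
    or more consecutive failures.

    `events` is an iterable of (source_ip, outcome) tuples in time order,
    where outcome is "fail" or "success" (a hypothetical, simplified schema).
    """
    consecutive_failures = defaultdict(int)
    suspects = []
    for source_ip, outcome in events:
        if outcome == "fail":
            consecutive_failures[source_ip] += 1
        elif outcome == "success":
            if consecutive_failures[source_ip] >= threshold:
                suspects.append(source_ip)
            # A success resets the streak for that source.
            consecutive_failures[source_ip] = 0
    return suspects


# Invented sample data using documentation-reserved IP ranges.
sample_events = (
    [("203.0.113.7", "fail")] * 5
    + [("203.0.113.7", "success")]
    + [("198.51.100.2", "fail"), ("198.51.100.2", "success")]
)
suspects = find_bruteforce_suspects(sample_events)
print(suspects)
```

A production rule would usually also bound the failures to a time window so that five mistakes spread over a month do not trigger the alert.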

Forensic Analysis After a Security Breach

After a security incident, system logs become the primary source of truth. Digital forensics investigators use logs to reconstruct the timeline of an attack. They can determine when an attacker gained access, what systems were compromised, and what data was exfiltrated.

For example, Windows Event Logs can show PowerShell commands executed by an attacker, while Linux syslog entries might reveal privilege escalation via sudo abuse.

Compliance and Regulatory Requirements

Many industries are legally required to maintain system logs. The HIPAA Security Rule requires audit controls that record activity in systems handling protected health information, and the GDPR's accountability and security obligations are commonly met in part by logging access to personal data.

Organizations must retain logs for a specified period (e.g., 6 months to 7 years) and ensure they are tamper-proof. Failure to comply can result in hefty fines and reputational damage.

How System Logs Work Across Different Operating Systems

Different operating systems generate and store system logs in unique ways. Understanding these differences is crucial for effective log management.

Linux: The Syslog Standard and Journalctl

Linux systems traditionally use the syslog protocol to manage system logs. The /var/log/ directory contains the log files; on Debian and Ubuntu these include syslog, auth.log, and kern.log, while Red Hat-based distributions use names such as messages and secure. Most modern distributions also run systemd-journald, which stores structured log entries that are queried with the journalctl command.

For example, running journalctl -u ssh.service displays logs specific to the SSH service (the unit is named sshd.service on some distributions), making troubleshooting easier.
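
When journalctl is run with `-o json`, it prints one JSON object per entry, which is convenient for scripting. The sketch below parses a single such line. The sample entry is invented for illustration; the field names `MESSAGE`, `PRIORITY`, `_SYSTEMD_UNIT`, and `__REALTIME_TIMESTAMP` (microseconds since the Unix epoch) are standard journal fields, while the output dictionary layout is an assumption.

```python
import json
from datetime import datetime, timezone


def parse_journal_entry(line):
    """Turn one line of `journalctl -o json` output into a small dict."""
    entry = json.loads(line)
    micros = int(entry["__REALTIME_TIMESTAMP"])  # microseconds since epoch
    return {
        "time": datetime.fromtimestamp(micros / 1_000_000, tz=timezone.utc),
        "unit": entry.get("_SYSTEMD_UNIT"),
        "priority": int(entry.get("PRIORITY", 6)),  # 6 = informational
        "message": entry.get("MESSAGE"),
    }


# Invented sample entry in the shape journalctl emits.
sample_line = (
    '{"__REALTIME_TIMESTAMP": "1700000000000000", '
    '"_SYSTEMD_UNIT": "ssh.service", "PRIORITY": "6", '
    '"MESSAGE": "Server listening on 0.0.0.0 port 22."}'
)
journal_record = parse_journal_entry(sample_line)
print(journal_record)
```

In practice the lines would come from piping `journalctl -u ssh.service -o json` into the script rather than from a hard-coded string.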

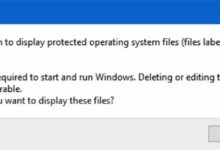

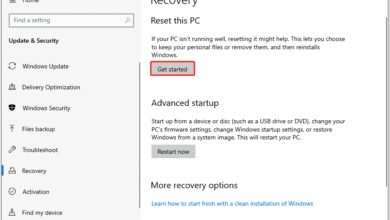

Windows: Event Viewer and Event IDs

Windows uses the Event Log service to record system events. The Event Viewer organizes the main Windows logs into categories that include Application, Security, and System. Each event carries an Event ID that identifies the type of event, which helps administrators quickly identify issues.

For instance, Event ID 4625 indicates a failed login attempt, while Event ID 4624 signifies a successful one. Microsoft provides a comprehensive Event ID reference guide for troubleshooting.
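
As a small illustration of how Event IDs are used in practice, the sketch below tallies logon outcomes from a list of IDs. It assumes the IDs have already been exported from the Security log by some other means; the function name and the sample list are hypothetical, and only the two IDs mentioned above are mapped.

```python
from collections import Counter

# Only the two Event IDs discussed in the text are mapped here.
LOGON_EVENT_IDS = {4624: "successful logon", 4625: "failed logon"}


def summarize_logons(event_ids):
    """Count logon successes and failures, ignoring unrelated Event IDs."""
    counts = Counter()
    for event_id in event_ids:
        label = LOGON_EVENT_IDS.get(event_id)
        if label:
            counts[label] += 1
    return dict(counts)


# Invented sample: two failures, one success, one unrelated event.
logon_summary = summarize_logons([4625, 4625, 4624, 7036])
print(logon_summary)
```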

macOS: Unified Logging System

Starting with macOS Sierra, Apple introduced the Unified Logging System (ULS). This system consolidates logs from apps, the kernel, and system processes into a single, efficient database. The log command-line tool allows users to query logs with powerful filtering options.

For example, log show --predicate 'eventMessage contains "error"' --last 24h retrieves the entries from the past 24 hours whose message contains the string "error".

Common Tools for Managing and Analyzing System Logs

With the volume of data generated by system logs, manual analysis is impractical. Specialized tools help automate collection, storage, and analysis.

ELK Stack: Elasticsearch, Logstash, Kibana

The ELK Stack is one of the most popular open-source solutions for log management. Elasticsearch indexes logs for fast searching, Logstash processes and enriches log data, and Kibana provides interactive dashboards.

For example, a DevOps team can use Kibana to visualize error rates across microservices in real time. The ELK Stack is highly scalable and integrates well with cloud environments.

Graylog: Centralized Log Management

Graylog offers a user-friendly interface for centralized log collection and analysis. It supports message extraction, alerting, and role-based access control. Graylog is ideal for mid-sized organizations that need powerful log analytics without the complexity of the ELK Stack.

It can ingest logs via Syslog, GELF (Graylog Extended Log Format), or Beats, and allows users to create custom dashboards and extractors.

Fluentd and Fluent Bit: Lightweight Log Collectors

Fluentd and its lightweight counterpart, Fluent Bit, are open-source data collectors designed to act as a unified logging layer. Fluentd's ecosystem includes more than 500 community-contributed plugins, enabling integration with a wide range of sources and destinations.

Fluent Bit is especially useful in containerized environments like Kubernetes, where resource efficiency is critical. It can collect logs from Docker containers and forward them to Elasticsearch or cloud storage.

Best Practices for Collecting and Storing System Logs

Effective log management isn’t just about collecting data—it’s about doing it securely, efficiently, and sustainably.

Centralized Logging: Why It’s Essential

Storing logs only on individual machines is risky. If a server is compromised or crashes, its logs may be lost or altered. Centralized logging, which sends logs to a dedicated server or cloud service, keeps a copy out of reach of the affected host and simplifies analysis.

Solutions like rsyslog and syslog-ng can forward logs from multiple sources to a central repository. This setup improves security and enables cross-system correlation.
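
From the application side, forwarding to a central collector can be as simple as attaching a syslog handler. The sketch below uses Python's standard `logging.handlers.SysLogHandler` over UDP. For the demo, a loopback socket stands in for the central server so the example is self-contained; the function name, logger name, and message are assumptions, and a real deployment would point at the rsyslog or syslog-ng host instead.

```python
import logging
import logging.handlers
import socket


def build_forwarding_logger(host, port, name="app"):
    """Return a logger that sends each record to a syslog endpoint over UDP."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    handler = logging.handlers.SysLogHandler(address=(host, port))  # UDP by default
    handler.setFormatter(logging.Formatter("%(name)s: %(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger


# Demo: a loopback UDP socket stands in for the central log server.
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
receiver.settimeout(2.0)

forwarding_logger = build_forwarding_logger("127.0.0.1", receiver.getsockname()[1])
forwarding_logger.info("disk check complete")

datagram, _ = receiver.recvfrom(4096)
print(datagram)
receiver.close()
```

UDP is used here only because it is the handler's default; plain UDP syslog is unencrypted and can drop messages, so production forwarding typically uses TCP with TLS.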

Log Rotation and Retention Policies

Logs grow quickly. A single server can generate gigabytes of logs per day. Without rotation, disk space will fill up, potentially crashing the system.

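Log rotation involves archiving old logs and deleting them after a set period. Tools like logrotate on Linux automate this process. Retention policies should align with compliance requirements. PCI DSS, for example, requires at least 12 months of audit log history with the most recent three months immediately available for analysis, and HIPAA's six-year documentation retention rule is commonly applied to audit logs.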
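
The rotation idea can be demonstrated inside a single application with Python's standard `RotatingFileHandler`. This is an in-process analogue rather than a replacement for logrotate: the 1 KB size limit, the three-backup cap, and the temporary directory are arbitrary values chosen to make the behavior visible quickly.

```python
import logging
import logging.handlers
import os
import tempfile

log_dir = tempfile.mkdtemp()
log_path = os.path.join(log_dir, "app.log")

# Roll over before the file reaches 1 KB and keep at most three old files.
rotating_handler = logging.handlers.RotatingFileHandler(
    log_path, maxBytes=1024, backupCount=3
)
rotation_logger = logging.getLogger("rotation_demo")
rotation_logger.setLevel(logging.INFO)
rotation_logger.addHandler(rotating_handler)

# Write enough entries to force several rollovers.
for i in range(200):
    rotation_logger.info("event number %d with some padding text", i)

rotating_handler.close()
rotated_files = sorted(os.listdir(log_dir))
print(rotated_files)
```

Older backups beyond the cap are deleted automatically, which is the same trade-off a retention policy makes: bounded disk use in exchange for losing the oldest entries.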

Securing Log Data Against Tampering

Logs are only trustworthy if they’re secure. Attackers often delete or alter logs to cover their tracks. To prevent this, logs should be transmitted over encrypted channels (e.g., TLS) and stored in write-once, read-many (WORM) storage.

Additionally, access to log servers should be strictly controlled using role-based permissions. Hashing and digital signatures can further ensure log integrity.
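
One way hashing can support integrity is a hash chain, where each entry's digest depends on the previous digest, so editing any earlier entry invalidates everything after it. The following is a minimal sketch of that idea under assumed names (`chain_entries`, `verify_chain`) and invented log lines; it is not a description of any specific product's mechanism.

```python
import hashlib

GENESIS = "0" * 64  # fixed starting value for the first link


def _link(previous_digest, entry):
    """Digest of one entry bound to the digest that precedes it."""
    return hashlib.sha256((previous_digest + entry).encode("utf-8")).hexdigest()


def chain_entries(entries):
    """Return a list of (entry, digest) pairs forming a hash chain."""
    chained, previous = [], GENESIS
    for entry in entries:
        digest = _link(previous, entry)
        chained.append((entry, digest))
        previous = digest
    return chained


def verify_chain(chained):
    """Recompute every digest and report whether the chain is intact."""
    previous = GENESIS
    for entry, digest in chained:
        if _link(previous, entry) != digest:
            return False
        previous = digest
    return True


# Invented sample entries.
chain = chain_entries([
    "user=alice action=login",
    "user=alice action=read file=payroll.csv",
    "user=alice action=logout",
])
intact = verify_chain(chain)

# Simulate an attacker rewriting the middle entry but not its digest.
tampered = list(chain)
tampered[1] = ("user=alice action=read file=notes.txt", chain[1][1])
tampered_ok = verify_chain(tampered)
print(intact, tampered_ok)
```

An attacker who can rewrite the whole file could recompute the chain, so the latest digest still needs to be stored or signed somewhere the attacker cannot reach, such as the WORM storage mentioned above.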

Advanced Techniques: Real-Time Monitoring and Alerting

Modern IT environments demand real-time visibility. Waiting for a system to fail before checking logs is no longer acceptable.

Setting Up Real-Time Log Monitoring

Real-time monitoring involves continuously analyzing incoming log data for specific patterns. Prometheus with Grafana covers metrics, Grafana Loki adds log aggregation alongside them, and commercial platforms like Datadog can monitor logs and metrics together.

For example, a rule can be set to trigger an alert whenever the word “CRITICAL” appears in an application log, allowing immediate response.

Creating Effective Alerting Rules

Not all log entries require attention. Effective alerting avoids noise by focusing on high-impact events. A good alert rule should be specific, actionable, and measurable.

Examples include:

- More than 10 failed login attempts in 5 minutes

- Disk usage exceeds 90%

- Service downtime detected

Alerts should be sent via email, SMS, or integrated with incident management tools like PagerDuty.
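
The first example rule, more than 10 failed logins in 5 minutes, is a sliding-window count. The sketch below shows one way to express it; the class name and the in-memory deque are assumptions made for illustration, and a real alerting tool would evaluate the same condition in its own query language.

```python
from collections import deque
from datetime import datetime, timedelta


class FailedLoginRule:
    """Fire when more than `max_failures` occur within `window`."""

    def __init__(self, max_failures=10, window=timedelta(minutes=5)):
        self.max_failures = max_failures
        self.window = window
        self._timestamps = deque()

    def observe(self, timestamp):
        """Record one failed login; return True if the rule should fire."""
        self._timestamps.append(timestamp)
        cutoff = timestamp - self.window
        # Drop failures that have aged out of the window.
        while self._timestamps and self._timestamps[0] <= cutoff:
            self._timestamps.popleft()
        return len(self._timestamps) > self.max_failures


start = datetime(2024, 1, 1, 9, 0, 0)

# Burst: one failure every 10 seconds, 12 in total.
burst_rule = FailedLoginRule()
burst_results = [burst_rule.observe(start + timedelta(seconds=10 * i)) for i in range(12)]

# Slow trickle: one failure per minute never exceeds the threshold.
slow_rule = FailedLoginRule()
slow_results = [slow_rule.observe(start + timedelta(minutes=i)) for i in range(30)]

print(burst_results)
print(any(slow_results))
```

The slow-trickle case shows why the window matters: the same number of failures spread over a longer period is treated as normal noise rather than an attack.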

Using Machine Learning for Anomaly Detection

Advanced systems now use machine learning to detect anomalies in system logs. Instead of relying on predefined rules, ML models learn normal behavior and flag deviations.

For instance, a model might notice that a server usually receives 100 requests per minute but suddenly spikes to 10,000—indicating a potential DDoS attack. Platforms like Splunk and IBM QRadar offer built-in ML capabilities for log analysis.
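
The core idea of learning a baseline and flagging deviations can be shown with a simple z-score check. This is a statistical stand-in for illustration, not a trained ML model and not how Splunk or QRadar work internally; the threshold of three standard deviations and the sample request counts are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history, current, z_threshold=3.0):
    """Flag `current` if it lies more than `z_threshold` standard
    deviations from the mean of `history` (needs at least two points)."""
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        return current != baseline
    return abs(current - baseline) / spread > z_threshold


# Invented requests-per-minute history hovering around 100.
request_history = [98, 102, 100, 97, 103, 101, 99, 100]

spike_flagged = is_anomalous(request_history, 10_000)  # the spike from the text
normal_flagged = is_anomalous(request_history, 104)    # ordinary variation
print(spike_flagged, normal_flagged)
```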

Challenges and Pitfalls in System Logs Management

Despite their benefits, managing system logs comes with significant challenges.

Log Volume and Data Overload

Modern systems generate massive amounts of log data. A single cloud environment can produce terabytes of logs daily. This volume makes storage expensive and analysis slow.

Solutions include log sampling, filtering irrelevant entries, and using compression algorithms. Cloud providers like AWS offer cost-effective storage options such as S3 Glacier for archival logs.

Log Format Inconsistency

Different applications and devices use different log formats. Some use JSON, others plain text, and many have custom formats. This inconsistency complicates parsing and analysis.

Normalization tools like Logstash or Fluentd can convert logs into a standard format, making them easier to query and correlate.
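
Normalization itself is conceptually simple: detect the input format and map it onto one schema. The sketch below handles a JSON line and a plain-text line. The input key names (`ts`, `severity`, `msg`), the plain-text layout, and the output schema are all assumptions chosen for the example, not formats defined by Logstash or Fluentd.

```python
import json
import re

# Assumed plain-text layout: "<timestamp> <LEVEL> <message>"
PLAIN_PATTERN = re.compile(
    r"^(?P<timestamp>\S+)\s+(?P<level>[A-Z]+)\s+(?P<message>.*)$"
)


def normalize(line):
    """Map a JSON or plain-text log line onto one common schema."""
    line = line.strip()
    if line.startswith("{"):
        try:
            raw = json.loads(line)
            return {
                "timestamp": raw.get("ts"),
                "level": str(raw.get("severity", "UNKNOWN")).upper(),
                "message": raw.get("msg", ""),
            }
        except json.JSONDecodeError:
            pass  # fall through and treat the line as plain text
    match = PLAIN_PATTERN.match(line)
    if match:
        return match.groupdict()
    # Unrecognized format: keep the raw text so nothing is lost.
    return {"timestamp": None, "level": "UNKNOWN", "message": line}


json_line = '{"ts": "2024-01-03T10:00:00Z", "severity": "error", "msg": "db timeout"}'
plain_line = "2024-01-03T10:00:05Z WARN cache miss ratio high"

normalized = [normalize(json_line), normalize(plain_line)]
print(normalized)
```

With both sources in the same shape, a single query such as "all entries at level ERROR" works across applications.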

False Positives in Alerting

Overly sensitive alerting rules can generate false positives—alerts that don’t indicate real problems. This leads to alert fatigue, where teams start ignoring warnings.

To reduce false positives, fine-tune rules based on historical data and use contextual information. For example, a failed login from a known IP during business hours may not be suspicious.
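
The contextual check in that example can be written as a small suppression filter. The office network range, the 09:00 to 18:00 weekday schedule, and the function name are hypothetical values for illustration; each organization would substitute its own.

```python
import ipaddress
from datetime import datetime

KNOWN_NETWORKS = [ipaddress.ip_network("10.0.0.0/8")]  # hypothetical office range
BUSINESS_HOURS = range(9, 18)  # 09:00 to 17:59, an assumed schedule


def should_alert(source_ip, when):
    """Suppress the alert only for known networks during business hours."""
    address = ipaddress.ip_address(source_ip)
    from_known_network = any(address in network for network in KNOWN_NETWORKS)
    in_business_hours = when.weekday() < 5 and when.hour in BUSINESS_HOURS
    return not (from_known_network and in_business_hours)


weekday_morning = datetime(2024, 1, 3, 10, 0)  # a Wednesday
weekday_night = datetime(2024, 1, 3, 2, 0)

print(should_alert("10.1.2.3", weekday_morning))     # known IP, office hours
print(should_alert("203.0.113.7", weekday_morning))  # unknown IP
print(should_alert("10.1.2.3", weekday_night))       # known IP, odd hour
```

Suppression rules like this should stay narrow, since an attacker who compromises a machine inside the known range would otherwise inherit its trusted status.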

Future Trends in System Logs and Log Management

The field of log management is evolving rapidly, driven by cloud computing, AI, and DevOps practices.

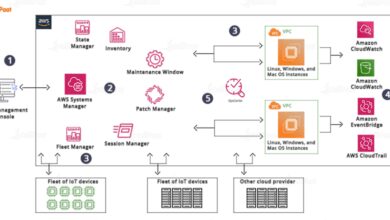

Cloud-Native Logging and Observability

As organizations move to the cloud, traditional logging approaches are being replaced by cloud-native observability platforms. Tools like AWS CloudWatch, Google Cloud Logging, and Azure Monitor provide integrated logging, monitoring, and tracing.

These platforms support auto-scaling, seamless integration with container orchestration (e.g., Kubernetes), and advanced analytics.

AI-Powered Log Analysis

Artificial intelligence is transforming how we analyze system logs. AI can automatically categorize log entries, predict failures, and even suggest remediation steps.

For example, Google’s Cloud Operations suite uses AI to detect anomalies and reduce mean time to resolution (MTTR).

The Rise of Observability Over Traditional Monitoring

Observability goes beyond monitoring by allowing teams to ask arbitrary questions about system behavior. It combines logs, metrics, and traces (distributed tracing) to provide deep insights.

Modern observability tools like Datadog, New Relic, and Grafana with Loki enable engineers to understand not just *that* a system failed, but *why* it failed.

What are system logs used for?

System logs are used for troubleshooting system errors, monitoring security events, ensuring compliance with regulations, and analyzing system performance. They provide a detailed record of what happens within a computing environment, enabling administrators to detect issues, investigate incidents, and optimize operations.

How long should system logs be kept?

The retention period for system logs depends on regulatory requirements and organizational policies. For example, HIPAA's six-year documentation retention requirement is commonly applied to audit logs, while PCI DSS requires at least 12 months of audit log history with the most recent three months immediately available. Many organizations retain logs for 1 to 7 years for security and audit purposes.

Can system logs be faked or tampered with?

Yes, system logs can be tampered with if not properly secured. Attackers may delete or alter logs to hide their activities. To prevent this, logs should be stored centrally, transmitted over encrypted channels, and protected with access controls and integrity checks like hashing.

What is the difference between logs and events?

An event is a single occurrence in a system (e.g., a user login), while a log is a record of that event. Logs collect multiple events over time and provide context such as timestamps, severity levels, and source information. Events are the ‘what,’ logs are the ‘documentation.’

Which tool is best for analyzing system logs?

The best tool depends on your needs. For open-source solutions, ELK Stack and Graylog are excellent. For enterprise environments, Splunk and IBM QRadar offer advanced features. Cloud users may prefer AWS CloudWatch or Google Cloud Logging. Fluentd and Fluent Bit are ideal for lightweight, high-performance log collection.

System logs are far more than technical records—they are the heartbeat of modern IT infrastructure. From detecting cyber threats to ensuring regulatory compliance, they provide invaluable insights into system behavior. As technology evolves, so too will the tools and techniques for managing logs. Embracing best practices in log collection, analysis, and security is essential for any organization serious about reliability and safety. Whether you’re a system administrator, security analyst, or DevOps engineer, mastering system logs is a critical skill in today’s digital landscape.
